FlexPod MetroCluster IP Solutions

Jyh-shing Chen

This technical blog presents an overview of the FlexPod® Datacenter platform, highlighting NetApp® ONTAP® 9.6 MetroCluster™ IP (Internet Protocol) features and capabilities. It also discusses an example FlexPod MetroCluster IP solution configuration that companies can adopt to create a flexible, scalable, and highly available converged infrastructure in which the MetroCluster storage clusters can be separated by up to 700 km. This infrastructure can protect business-critical data services against single-site outages and help to achieve business continuity objectives.

Data Accessibility: The Key to Business Success

As a business grows, the availability of its data services becomes increasingly crucial to its continued success, because data accessibility is key to meeting business objectives and serving customers. A FlexPod converged infrastructure solution from NetApp and Cisco gives companies a highly flexible and scalable platform to ensure the availability of their business-critical data services.

With ONTAP MetroCluster, data from one ONTAP storage cluster can be mirrored synchronously to another ONTAP storage cluster located at a different physical site. When one of the sites encounters a problem, such as a power outage or other type of site disaster that impacts its IT infrastructure, the operations can be switched over to use the data from the alternate site to ensure business continuity.

With ONTAP 9.6, customers have more flexibility in how they deploy FlexPod MetroCluster IP solutions. For example, a full range of AFF and FAS systems is supported, and a shared layer 2 inter-switch link (ISL) can be used to deploy a FlexPod MetroCluster IP solution. In addition, customers can choose from a wider range of site locations, from adjacent sites on a campus to sites that are geographically separated by up to 700 km. Operations are further simplified by the automatic healing enhancement for the MetroCluster IP solution, which reduces the number of steps needed to return to normal operations after a site outage or disaster.

About the FlexPod Solutions

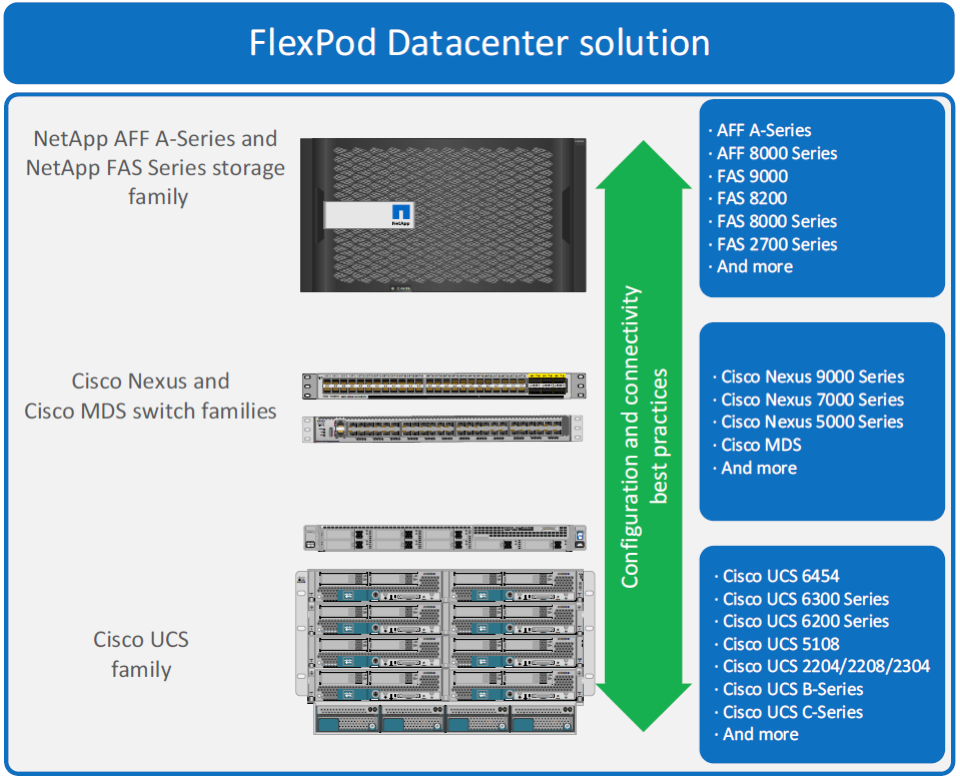

FlexPod Datacenter is built with the Cisco Unified Computing System (UCS) family, the Cisco Nexus and MDS family of switches, and NetApp AFF and FAS storage arrays.

FlexPod Datacenter is a massively scalable architecture platform for enterprise applications and business-critical workloads such as databases, virtual desktop infrastructures (VDI), artificial intelligence, and machine learning. The flexibility and scalability of FlexPod solutions make them especially suitable for private and hybrid cloud infrastructure implementations, which vary in scale depending on specific solution requirements. For full information about the FlexPod Datacenter platform, refer to the FlexPod Datacenter Technical Specifications.

Figure 1 shows some of the FlexPod Datacenter solution family components that can be used in solution design and implementation. To increase performance and capacity, a FlexPod solution can be scaled up to include more compute, network, or storage resources. It can also be scaled out by deploying multiple FlexPod systems.

Figure 1) FlexPod Datacenter Solution component families.

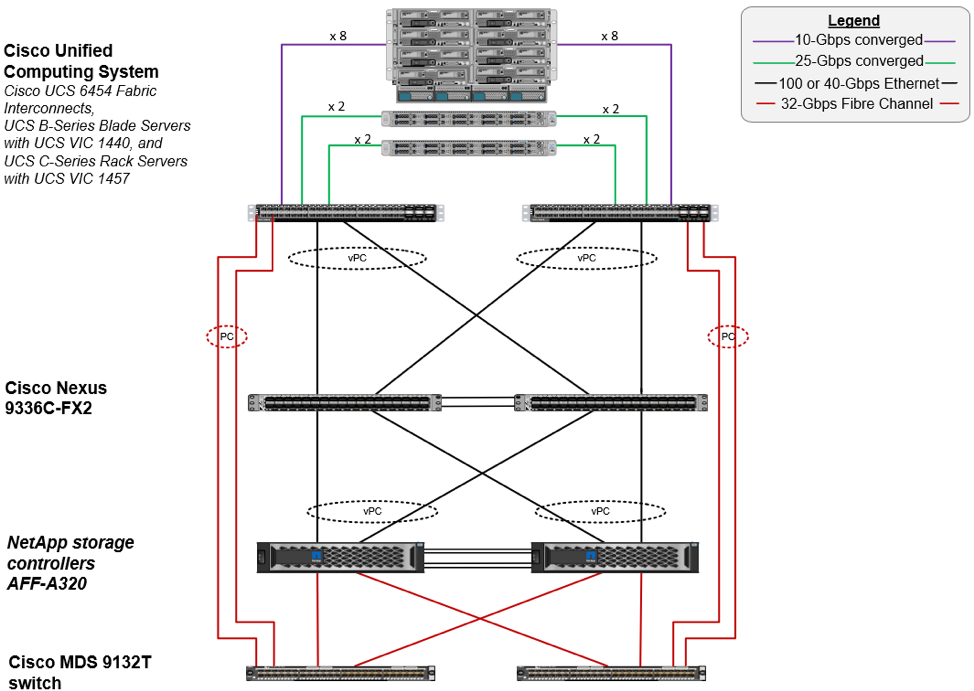

Figure 2 shows an example FlexPod Datacenter topology. This Cisco Validated Design with VMware vSphere 6.7U2 is deployed on top of a FlexPod design consisting of the Cisco fourth-generation Fabric Interconnect, UCS B-Series blade servers, UCS C-Series rack servers, Nexus and MDS switches, and NetApp AFF A320 storage controllers.

Figure 2) FlexPod Datacenter topology with VMware vSphere 6.7U2, Cisco fourth-generation UCS, and NetApp® AFF A320.

About ONTAP MetroCluster

ONTAP MetroCluster is an ONTAP feature that customers can use to synchronously mirror stored data from one site to another. The sites are typically separated to help protect the solution against single-site power loss, other types of disaster, and hardware failures. The ONTAP MetroCluster solution improves the availability of the data services and ensures business continuity.

The ONTAP MetroCluster solution has a zero recovery point objective (RPO) and a low recovery time objective (RTO) to ensure that no data is lost as a result of a single-site failure and that the data services can be quickly switched over to the surviving site for business continuity.

Synchronous Data Replication

When the two physically and geographically separated ONTAP clusters are configured to work together as an ONTAP MetroCluster solution, the data written to either of the ONTAP clusters is automatically synchronously mirrored to the other site for every I/O request. The data replication happens “under the hood” of the ONTAP software and therefore is transparent to the clients performing the I/O.

To accommodate and mirror the data coming from the other site, the storage in the ONTAP clusters is partitioned to store data both locally and remotely with a mirrored aggregate configuration consisting of two disk pools, pool 0 for local storage and pool 1 for remote storage. Because the client data is synchronized at both sites, data can be served from the remote site if the situation requires it, such as in a simulated testing scenario or when a real disaster occurs.
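The mirrored aggregate idea can be pictured with a small Python sketch. This is a conceptual toy model only, not how ONTAP is implemented: the class names `Plex` and `MirroredAggregate` and their methods are hypothetical, chosen to show that a write is acknowledged only after both the pool 0 (local) and pool 1 (remote) copies hold the data, and that reads can be served from either copy.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class Plex:
    """One copy of an aggregate's data, backed by a single disk pool (hypothetical model)."""
    pool: int
    blocks: Dict[int, bytes] = field(default_factory=dict)


@dataclass
class MirroredAggregate:
    """Toy mirrored aggregate: pool 0 holds the local copy, pool 1 the remote copy."""
    local: Plex = field(default_factory=lambda: Plex(pool=0))
    remote: Plex = field(default_factory=lambda: Plex(pool=1))

    def write(self, block_id: int, data: bytes) -> bool:
        # Synchronous mirroring: update both copies before acknowledging the write.
        self.local.blocks[block_id] = data
        self.remote.blocks[block_id] = data
        return True  # acknowledgement returned to the client

    def read(self, block_id: int, from_remote: bool = False) -> bytes:
        # After a switchover, reads would be served from the remote copy.
        plex = self.remote if from_remote else self.local
        return plex.blocks[block_id]


aggr = MirroredAggregate()
aggr.write(7, b"payroll record")
print(aggr.read(7) == aggr.read(7, from_remote=True))
```

Because every acknowledged write exists in both pools, the model loses no data when either copy becomes unavailable, which is the intuition behind the zero RPO described earlier.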

In addition to synchronously replicating the client I/O data, the configuration details of the ONTAP MetroCluster IP solution itself are also replicated to the other site so that the two sites stay in sync from the perspectives of both stored data and configuration.

Solution Configurations, Site Connectivity, and Distance Support

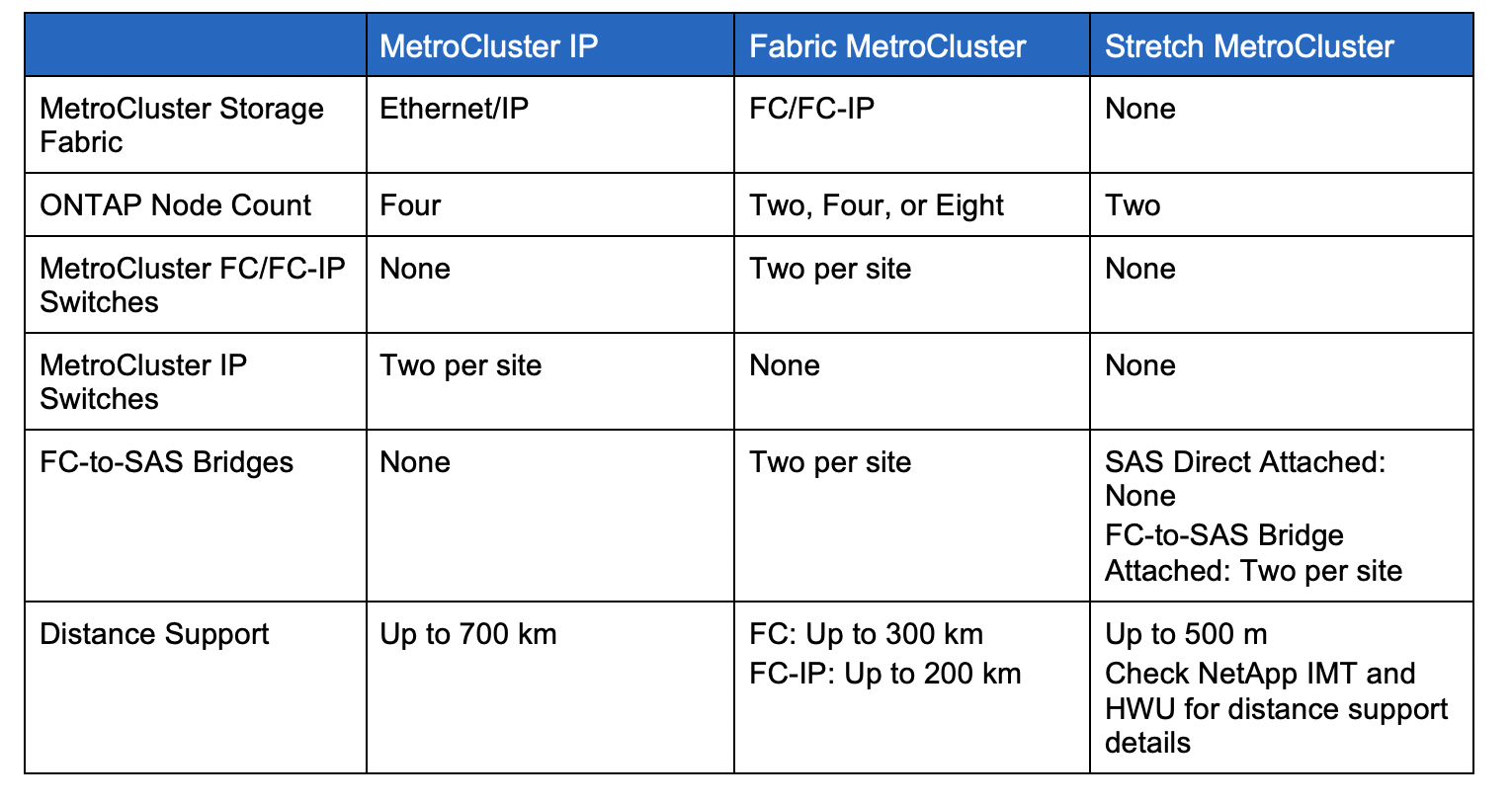

There are three main configurations for the ONTAP MetroCluster solutions, based on the storage back-end connectivity implementation between sites: MetroCluster IP, fabric MetroCluster, and stretch MetroCluster. Table 1 compares some of the differences between MetroCluster IP and the other two solution configurations.

Table 1) ONTAP MetroCluster solution configurations.

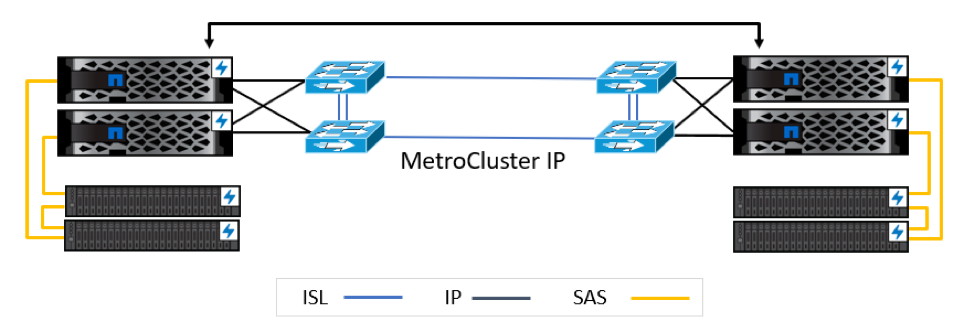

The MetroCluster IP solution uses a high-performance Ethernet storage fabric between sites. This is the latest addition to the MetroCluster solution portfolio to take advantage of high-speed Ethernet networks. The 4-node MetroCluster IP solution shown in Figure 3 offers a simple solution architecture, a low number of solution components, and support for up to 700 km between sites.

Figure 3) Four-node MetroCluster™ IP configuration.

Site Disaster and Recovery

To verify that the solution is configured correctly and working properly, it is a best practice to simulate a site disaster, either by performing a MetroCluster switchover operation or by introducing a simulated hardware failure, such as powering off the storage controllers at one site. The goal is to confirm that data services resume properly from the surviving site after a site failure.

After a switchover operation, all the client I/O is served from the surviving cluster at the remote site. Some recovery operations are necessary after a site disaster or a site disaster simulation, such as repairing or replacing failed hardware and resynchronizing data via the healing process. These procedures are necessary to bring the root and data aggregates in the clusters back in sync between the sites before switching the client I/O operations back to the original site via a switchback operation.

The NetApp Tiebreaker software can be deployed at a third site to help monitor the health of the two MetroCluster sites. It can also be configured to automatically perform a switchover operation. The Tiebreaker software helps to prevent the “split brain” scenario in which the two clusters both attempt to perform a switchover operation when they have lost communication with each other. The Tiebreaker software prevents this scenario by checking on the status of the two sites from a third location.
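The value of a third vantage point can be illustrated with a simplified decision function in Python. This is a sketch of the general idea only and does not reproduce the actual Tiebreaker algorithm; the function name `surviving_site` and its three boolean inputs are assumptions made for illustration.

```python
from typing import Optional


def surviving_site(a_reachable: bool, b_reachable: bool,
                   peer_link_up: bool) -> Optional[str]:
    """Return the site that should take over, or None if no switchover is warranted.

    a_reachable / b_reachable: whether the third-site observer can reach each site.
    peer_link_up: whether the reachable site still sees its partner (hypothetical input).
    """
    if a_reachable and b_reachable:
        # Both sites are alive. Losing only the inter-site link must not
        # trigger a switchover, or both sides could take over (split brain).
        return None
    if not a_reachable and not b_reachable:
        # The observer itself may be isolated, so take no action.
        return None
    if peer_link_up:
        # The reachable site still sees its partner, so the problem is
        # probably on the observer's network path rather than a site failure.
        return None
    return "A" if a_reachable else "B"


print(surviving_site(True, True, False))   # inter-site link loss only
print(surviving_site(True, False, False))  # site B is down
```

The design point is that a switchover is recommended only when the observer and the surviving site independently agree that the other site is gone.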

ONTAP 9.6 MetroCluster IP Features and Capabilities

Here are some highlights of the ONTAP 9.6 MetroCluster IP features and capabilities.

700 km MetroCluster Distance Support

The MetroCluster IP solution design takes advantage of the high-speed Ethernet network storage fabric between the two MetroCluster sites to support a maximum site distance of up to 700 km.

Although increasing the distance between MetroCluster sites provides more choices for potential sites, it inevitably adds latency to client I/O, because every mirrored write must wait for signals to travel across the physical distance between the sites. A rule of thumb to keep in mind is that the round-trip latency increases by about 1 millisecond per 100 km of distance between the sites.
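The rule of thumb is easy to apply with a few lines of Python. The helper below is a minimal sketch; the function name `added_round_trip_ms` is hypothetical, and the result is only the distance-related estimate, not a measurement of any real network.

```python
def added_round_trip_ms(distance_km: float, ms_per_100_km: float = 1.0) -> float:
    """Estimate the extra round-trip latency caused by site separation.

    Uses the rule of thumb of roughly 1 ms of round-trip latency per 100 km.
    """
    if distance_km < 0:
        raise ValueError("distance_km must be non-negative")
    return distance_km / 100.0 * ms_per_100_km


for km in (10, 100, 300, 700):
    print(f"{km:>4} km -> about {added_round_trip_ms(km):.1f} ms added round-trip latency")
```

At the maximum supported distance of 700 km, the estimate is about 7 ms of added round-trip latency, which is worth weighing against the latency tolerance of the applications being protected.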

Expanded Platform Support

In addition to supporting the AFF A300, A700, and A800 series and the FAS8200 and FAS9000 series systems for MetroCluster IP deployment, ONTAP 9.6 also supports the deployment of MetroCluster IP solutions on the smaller-footprint AFF A320, A220, and FAS2750 systems. These systems are compact and cost effective to implement.

Lowered Operation Costs with Shared ISL

With ONTAP 9.6, instead of having dedicated connectivity between sites for the MetroCluster IP switches, the ISL ports on the MetroCluster IP switches can also be connected to an existing layer 2 IP network to help lower the cost of deploying and operating the MetroCluster IP solution.

However, for the MetroCluster solution to operate properly, the ISL and switches must meet the documented shared network requirements and best practices for latency, packet loss, bandwidth, quality of service, VLAN, MTU, traffic priority, congestion notification, and so on.

Simplified Operations

After a switchover operation, whether negotiated or unscheduled, a healing process is needed to bring the root and data aggregates back in sync between the sites before the cluster can accept a switchback operation to return to a normal operational state.

For the MetroCluster IP configuration, automatic healing is initiated for both negotiated and unscheduled switchover operations in ONTAP 9.6 to help reduce the number of recovery steps. Another operational simplification is the ability to use OnCommand® System Manager to manage and upgrade the ONTAP MetroCluster configurations.
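The effect of automatic healing on the recovery workflow can be sketched as a short Python function that lists the operator steps after a switchover. The function name `recovery_steps` is hypothetical, and the command strings are shown as they are commonly documented for the ONTAP CLI; treat them as assumptions and confirm the exact procedure in the MetroCluster Management and Disaster Recovery Guide for your release.

```python
from typing import List


def recovery_steps(automatic_healing: bool) -> List[str]:
    """Return the operator-issued steps needed after a switchover (illustrative only)."""
    steps: List[str] = []
    if not automatic_healing:
        # Without automatic healing, the operator resynchronizes the data
        # aggregates first and then the root aggregates.
        steps.append("metrocluster heal -phase aggregates")
        steps.append("metrocluster heal -phase root-aggregates")
    # Switchback returns client I/O to the original site once healing is complete.
    steps.append("metrocluster switchback")
    return steps


print(recovery_steps(automatic_healing=False))
print(recovery_steps(automatic_healing=True))
```

In this simplified view, automatic healing removes the two manual healing steps, leaving the switchback as the remaining operator action once the repaired site is ready.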

Example FlexPod MetroCluster IP Solution with AFF A320 and Shared ISL

With ONTAP 9.6, the MetroCluster IP switches can be connected to an existing layer 2 IP network between the two sites and used for the MetroCluster IP traffic (if the existing layer 2 IP network meets the documented requirements). This approach can save on the costs of deployment and operations.

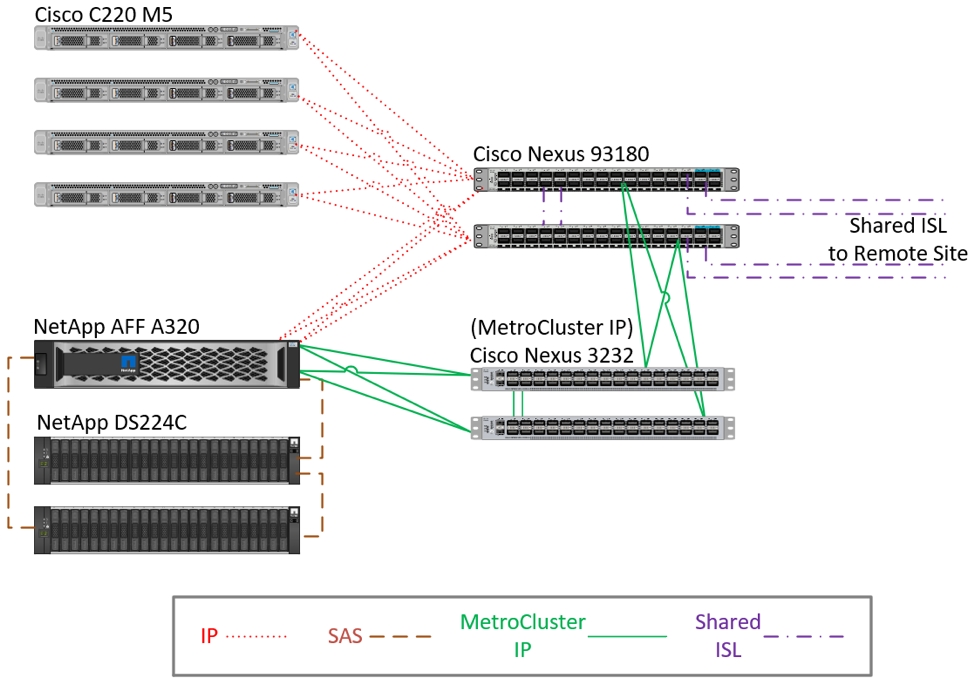

The FlexPod MetroCluster IP solution example illustrated in Figure 4 takes advantage of the shared layer 2 site-to-site connectivity and the AFF A320 storage. This solution architecture looks very similar to a traditional FlexPod configuration with Cisco C220 series servers, Nexus 93180 switches, and NetApp AFF A320 storage controllers. The only differences are the addition of the Cisco Nexus 3232 MetroCluster IP switches and the deployment of the same infrastructure at two sites, which together build a FlexPod MetroCluster IP solution that spans the two sites to protect data against single-site failure.

Figure 4) Example FlexPod MetroCluster™ IP with AFF A320 and shared ISL configuration at one site.

Conclusion

Many supported FlexPod and ONTAP MetroCluster IP configurations can be implemented together to create a FlexPod MetroCluster IP solution that is reliable and available enough to survive a single-site failure and achieve the desired business continuity objectives.

The MetroCluster IP configuration takes advantage of the high-speed Ethernet network-based ISL and can support up to 700 km distance between sites for data mirroring and protection. With ONTAP 9.6, the MetroCluster IP solution can also be deployed on the space-efficient and lower-cost AFF A320, A220, and FAS2750 systems.

In addition, the newly supported shared layer 2 network allows the solution to be deployed on an existing site-to-site network infrastructure, provided that the network and switches meet the documented specifications and configuration requirements. The operations of the MetroCluster IP configuration are simplified by the automatic healing feature, which can reduce the number of steps necessary to return to a normal operating state after a planned or unscheduled switchover operation.

FlexPod MetroCluster IP solutions with ONTAP 9.6 take advantage of the reliability and availability of the FlexPod design and ONTAP MetroCluster features and capabilities to protect business-critical data, ensure business continuity, and reduce risks from potential site power outages or other site failures. The Cisco Validated Design reference architecture, MetroCluster technical reports, ONTAP 9 documentation, Cisco HCL, NetApp Interoperability Matrix, and NetApp Hardware Universe are valuable resources for customers and partners in their design and implementation of a highly scalable, highly reliable, and highly available FlexPod MetroCluster IP solution.

Where to Find More Information

Cisco FlexPod Design Guides

Cisco UCS Hardware and Software Compatibility

Cisco Validated Design Overview

FlexPod Datacenter with NetApp ONTAP 9.6, Cisco UCS 4th Generation, and VMware vSphere 6.7 U2

NetApp Hardware Universe (HWU)

NetApp Interoperability Matrix Tool (IMT)

ONTAP 9 Documentation Center

ONTAP 9 MetroCluster IP Installation and Configuration Guide

ONTAP 9 MetroCluster Management and Disaster Recovery Guide

TR-4036 FlexPod Datacenter Technical Specifications

TR-4689 MetroCluster IP Solution Architecture and Design

TR-4705 NetApp MetroCluster Solution Architecture and Design

VMware vSphere 5.x and 6.x support with NetApp MetroCluster (2031038)

Jyh-shing Chen

Jyh-shing Chen is a Senior Technical Marketing Engineer with NetApp. His current focus is on Converged Infrastructure solution enablement, validation, and deployment and management simplification with Ansible automation. Jyh-shing joined NetApp in 2006 and previously worked in several other areas, including storage interoperability with Solaris and VMware vSphere operating systems, and qualification of ONTAP MetroCluster solutions and Cloud Volumes data services. Before joining NetApp, Jyh-shing’s engineering experience included software and firmware development for cardiology health imaging systems, mass spectrometer systems, and Fibre Channel virtual tape libraries, as well as the research and development of microfluidic devices. Jyh-shing holds B.S. and M.S. degrees from National Taiwan University, a PhD from Massachusetts Institute of Technology, and an MBA from Meredith College.